Overview

This dataset contains 121,190 thermal videos, from The Cacophony Project. The Cacophony Project focuses on monitoring native wildlife of New Zealand, with a particular emphasis on detecting and understanding the behaviour of invasive predators such as possums, rodents, cats, hedgehogs, and mustelids. The goal of this work is to conserve and restore native wildlife by eliminating these invasive species from the wild.

The videos were captured across various regions of New Zealand, mostly at night. Video capture was triggered by a change in incident heat; approximately 24,000 videos are labeled as false positives. Labels are provided for 45 categories; the most common (other than false positives) are “bird”, “rodent”, and “possum”. A full list of labels and the number of videos associated with each label is available here (csv).

Benchmark results

Benchmark results on this dataset, with instructions for reproducing those results, are available here.Citation, license, and contact information

For questions about this dataset, contact coredev@cacophony.org.nz at The Cacophony Project.

This data set is released under the Community Data License Agreement (permissive variant).

No citation is required, but if you find this dataset useful, or you just want to support technology that is contributing to the conservation of New Zealand’s native wildlife, consider donating to The Cacophony Project.

Data format

If you prefer tinkering with a notebook to reading documentation, you may prefer to ignore this section, and go right to the sample notebook.

Three versions of each video are available:

- An mp4 video, in which the background has been estimated as the median of the clip, and each frame represents the deviation from that background. This video is referred to as the “filtered” video in the metadata described below. We think this is what most ML folks will want to use. This is not a perfectly faithful representation of the original data, since we’ve lost information about absolute temperature, and median-filtering isn’t a perfect way to estimate background. Nonetheless, this is probably easiest to work with for 99% of ML applications. (example background-subtracted video)

- An mp4 video, in which each frame has been independently normalized. This captures the gestalt of the scene a little better, e.g. you can generally pick out trees. (example non-background-subtracted video)

- An HDF file representing the original thermal data, including – in some cases – metadata that allows the reconstruction of absolute temperature. More complicated to work with, but totally faithful to what came off of the sensor. These files are also more than ten times larger (totaling around 500GB, whereas the compressed mp4’s add up to around 35GB), so they are not included in the big zipfile below. We don’t expect most ML use cases to need these files.

The metadata file (.json) describes the annotations associated with each video. Annotators were not labeling whole videos with each tag, they were labeling “tracks”, which are individual sequences of continuous movement within a video. So in some cases, for example, a single cat might move through the video, disappear behind a tree, and re-appear, in which case the metadata will contain two tracks with the “cat” label. The metadata contains the start/stop frames of each track and the trajectories of those tracks. Tracks are based on thresholding and clustering approaches, i.e., the tracks are themselves machine-generated, but they are generally quite reliable (other than some false positives), and the main focus of this dataset is the classification of tracks and pixels (both movement trajectory and thermal appearance can contribute to species identification), rather than improving tracking.

More specifically the main metadata file contains everything about each clip except the track coordinates, which would make the metadata file very large. Each clip also has its own .json metadata file, which has all the information available in the main metadata file about that clip, as well as the track coordinates.

Clip metadata is stored as a dictionary with an “info” field containing general information about the dataset, and a “clips” field with all the information about the videos. The “clips” field is a list of dicts, each element corresponding to a video, with fields:

- filtered_video_filename: the filename of the mp4 video that has been background-subtracted

- video_filename: the filename of the mp4 video in which each frame has been independently normalized

- hdf_filename: the filename of the HDF file with raw thermal data

- metadata_filename: the filename of the .json file with the same data included in this clip’s dictionary in the main metadata file, plus the track coordinates

- width, height

- frame_rate: always 9

- error: usually None; a few HDF files were corrupted, but are kept in the metadata for book-keeping purposes, in which case *only* this field and the HDF filename are populated

- labels: a list of unique labels appearing in this video; technically redundant with information in the “tracks” field, but makes it easier to find videos of a particular category

- location: a unique identifier for the location (camera) from which this video was collected

- id: a unique identifier for this video

- calibration_frames: if non-empty, a list of frame indices in which the camera was self-calibrating; data may be less reliable during these intervals

- tracks: a list of annotated tracks, each of which is a dict with fields “start_frame”, “end_frame”, “points”, and “tags”. “tags” is a list of dicts with fields “label” and “confidence”. All tags were reviewed by humans, so the “confidence” value is mostly a remnant of an AI model that was used as part of the labeling process, and these values may or may not carry meaningful information. “points” is a list of (x,y,frame) triplets. x and y are in pixels, with the origin at the upper-left of the video. As per above, the “points” array is only available in the individual-clip metadata files, not the main metadata file.

That was a lot. tl;dr: check out the sample notebook.

A recommended train/test split is available here. The train/test split is based on location IDs, but the splits are provided at the level of clip IDs. For a few categories where only a very small number of examples exist, those examples were divided across the splits (so a small number of locations appear in both train and test, but only for a couple of categories).

The format of the HDF files is described here. The script used to convert the HDF files to json/mp4 is available here.

Downloading the data

The main metadata, individual clip metadata, and mp4 videos are all available in a single zipfile:

Download metadata, mp4 videos from GCP (33GB)

Download metadata, mp4 videos from AWS (33GB)

Download metadata, mp4 videos from Azure (33GB)

If you just want to browse the main metadata file, it’s available separately at:

metadata (5MB)

The HDF files are not available as a zipfile, but are available in the following cloud storage folders:

- gs://public-datasets-lila/nz-thermal/hdf (GCP)

- s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/nz-thermal/hdf (AWS)

- https://lilawildlife.blob.core.windows.net/lila-wildlife/nz-thermal/hdf (Azure)

The unzipped mp4 files are available in the following cloud storage folders:

- gs://public-datasets-lila/nz-thermal/videos (GCP)

- s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/nz-thermal/videos (AWS)

- https://lilawildlife.blob.core.windows.net/lila-wildlife/nz-thermal/videos (Azure)

For example, the clip with ID 1486055 is available at the following URLs (the non-background-subtracted mp4, background-subtracted mp4, and HDF file, respectively):

- https://storage.googleapis.com/public-datasets-lila/nz-thermal/videos/1486055.mp4

- https://storage.googleapis.com/public-datasets-lila/nz-thermal/videos/1486055_filtered.mp4

- https://storage.googleapis.com/public-datasets-lila/nz-thermal/hdf/1486055.hdf5

…or the following gs URLs (for use with, e.g., gsutil):

- gs://public-datasets-lila/nz-thermal/videos/1486055.mp4

- gs://public-datasets-lila/nz-thermal/videos/1486055_filtered.mp4

- gs://public-datasets-lila/nz-thermal/hdf/1486055.hdf5

Having trouble downloading? Check out our FAQ.

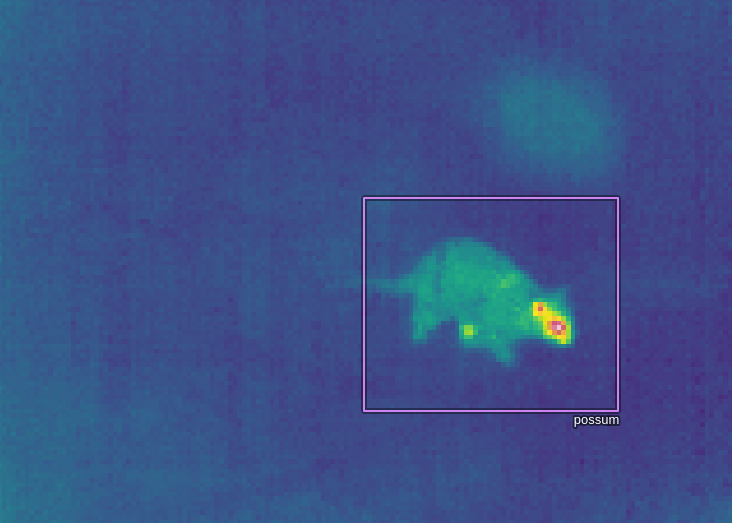

Neat thermal camera trap image

Posted by Dan Morris.